Corrections

How to build GUM and contribute corrections

If you notice some errors in the GUM corpus, you can contribute corrections in a number of ways. The easiest way is to open an issue in the GUM GitHub repository and describe the error and the file you're seeing it in.

If you would like to contribute corrections yourself (especially if you've done substantial edits rather than catching small errors you can describe), you can do so directly by forking the repository, editing specific files and submitting a pull request. The GUM build bot script will propagate changes to other relevant corpus formats and merge the changes, so it is important to read the following explanation carefully before editing anything.

The build bot is also used to reconstruct reddit data, merging all annotations after plain text data has been restored using _build/process_reddit.py.

Executive Summary (TL;DR)

- only edit files in _build/src/

- edit POS, lemma and TEI tags in _build/src/xml/

- edit dependencies and dependency functions in _build/src/dep/

- edit entities, information status, entity linking and coreference in _build/src/tsv/

- edit RST in _build/src/rst/

- you can't edit constituent trees and CLAWS tags

- you can't edit RST dependencies

- do not alter tokenization or sentence borders unless you know how

Where to correct what

GUM is distributed in a variety of formats which contain partially overlapping information. For example, almost all formats contain part of speech tags, which need to be in sync across formats. This synchronization is ensured by the GUM Build Script. As a result, it's important to know exactly where to correct what.

Overview

Of the many formats available in the GUM repo, only four are actually used to generate the dataset, with other formats being dynamically generated from these. The four main source formats are found under the directory _build/src/ in the sub-directories:

- xml/ - CWB vertical format XML for token annotations and TEI span annotations, incl. date/time expressions

- dep/ - dependency syntax in the 10 column CoNLLU format

- tsv/ - WebAnno 3 tab separated export format for entity, information status and coreference annotations

- rst/ - rhetorical structure theory analyses in the rs3 format as used by rstWeb

Note that the corresponding formats in the full corpus release contain more annotations: for example, lemmas are not included in the CoNLLU files in src/, but will be added automatically from the XML files in the final generated CoNLLU files for each release.

All formats other than these are generated from these files automatically and there is no possibility to edit them (changes will be quashed on the next build process). References to source directories below (e.g. xml/) always refer to these sub-directories (_build/source/xml/).

Starting in GUM version 7, we include a version of constituent parses inside src/const/. Only a small subset of these files has gold standard constituent annotations, and the rest are automatically updated with each release using a state-of-the-art parser relying on gold POS tags. To re-generate const/ files, run the build-bot with the option -p.

Committing your corrections to Github

Because multiple people can contribute corrections simultaneously, merging corrections is managed over github. To contibute corrections directly, you should always:

- Fork the dev branch

- Edit, commit and push to your fork

- Make a pull request into the origin dev branch

Correcting token strings

Token strings come from the first column of the files in xml/. These should normally not be changed. Changing token strings in any other format has no effect (changes will be overwritten or lead to a conflict and crash). To correct whitespace (e.g. CoNLLU SpaceAfter annotations, plain text in CoNLLU files, etc., you can group together tokens which have no whitespace between them by adding <w> tags around the token lines in the .xml files in xml/ (see those files for examples). Trivial contractions (e.g. "don't") do not need to be surrounded by tags and are interpreted as non-whitespace-separated by the build bot by default.

Correcting POS tags and lemmas

GUM contains lemmas and four types of POS tags for every token:

- 'Vanilla' PTB tags following Santorini (1990)

- Extended PTB tags as used by TreeTagger (Schmid 1994)

- CLAWS5 tags as used in the BNC

- Universal POS tags as used in the Univeral Dependencies project

You can correct lemmas and extended PTB tags in the xml/ directory. Vanilla PTB tags are produced fully automatically from the extended tags and should not be corrected. Correct the extended tags instead. CLAWS tags are produced by an automatic tagger, but are post-processed to remove errors based on the gold extended PTB tags. As a result, most CLAWS errors can be corrected by correcting the PTB tags. Direct corrections to CLAWS tags will be destroyed by the build script. If you find a CLAWS error despite a correct PTB tag, please let us know so we can improve post-processing.

Correcting TEI tags in xml/

The XML tags in the xml/ directory are based on the TEI vocabulary. Although the schema for GUM is much simpler than TEI, some nesting restrictions as well as naming conventions apply. Corrections to XML tags can be submitted, however please make sure that the corrected file validates using the XSD schema in the _build directory. Corrections that don't validate will fail to merge. If you feel the schema should be updated to accommodate some correction, please let us know.

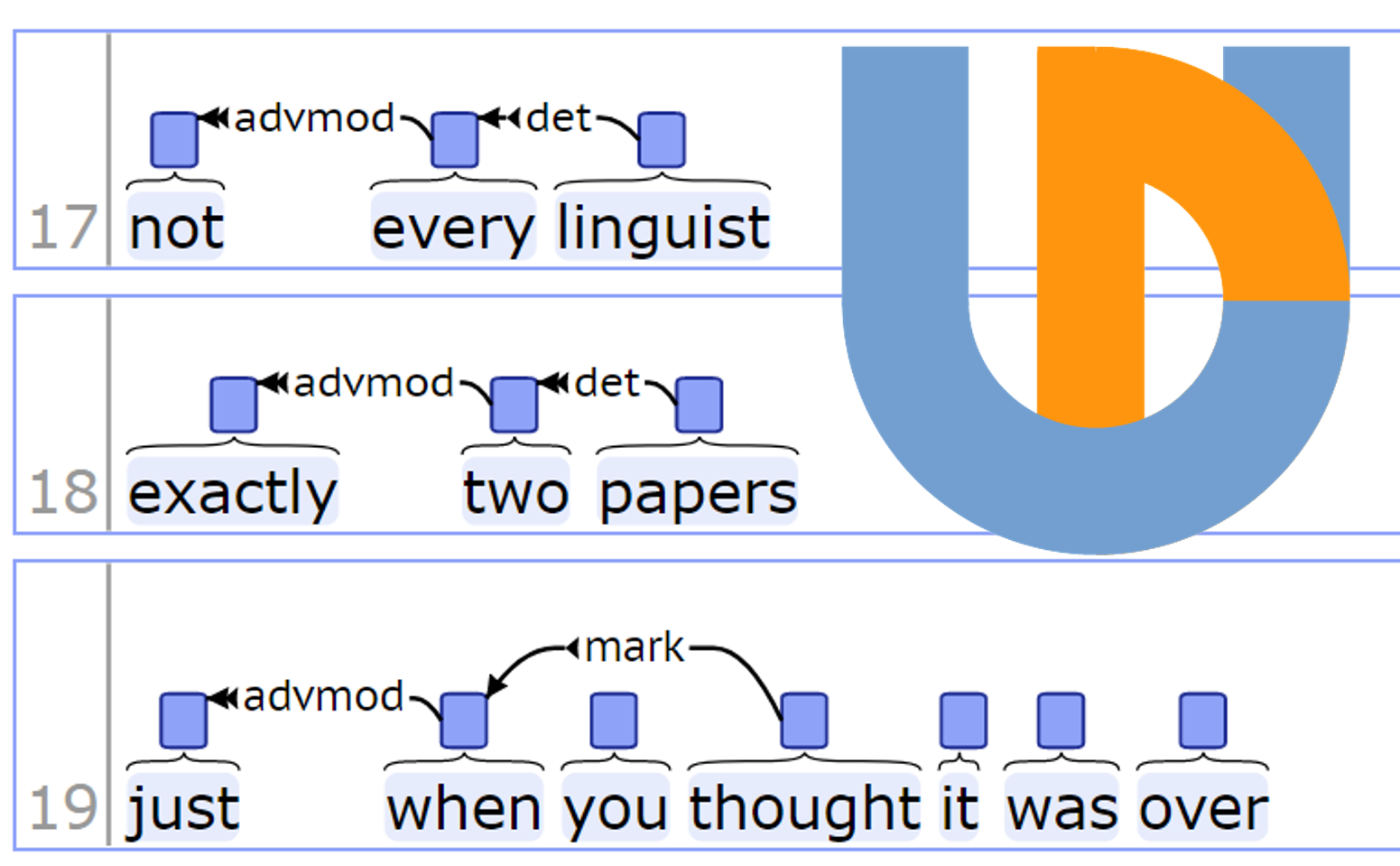

Dependencies

Syntactic dependency information in the src/dep/ directory can be corrected directly there. However note that:

- Only dependency head and function originate in these files

- POS tags and lemmas, as well as sentence type and speaker information come from the xml/ files

- You can't alter tokenization or sentence break information (see below)

const/ - Constituent trees

Constituent trees in src/const/ are generated automatically based on the tokenization, POS tags and sentence breaks from the XML files, and cannot be corrected manually at present. Eight constituent files are also hand-annotated and are never edited by the build-bot. Note that token-less data for reddit documents is included in the release under src/const/ for convenience. This data can be used to restore reddit constituent parses using _build/process_reddit.py without having to re-run the constituent parser.

Syntactic function labels for constituents (e.g. NP-SBJ, PP-DIR, etc.) are projected automatically from the dependency syntax files; if you find a constituent function label error on a correct phrase tree, you cannot correct it manually, but please let us know so we can improve the projection algorithm.

tsv/ - Coreference, entities and wikification

Coreference and entity annotations are available in several formats, but all information is projected from the src/tsv/ directory. You can correct entity types, entity linking (Wikification), information status, and even coreference and bridging edges, though care must be taken that the WebAnno TSV format has been edited correctly.

rst/ - Rhetorical Structure Theory

The rst/ directory contains Rhetorical Structure Theory analyses in the rs3 format. You can edit the rhetorical relations and even make finer grained segments, but:

- You cannot edit the tokenization, which is expressed by spaces inside each segment, but ultimately generated from the XML files from xml/

- By convention, you are not allowed to make segments that contain multiple sentences according to the <s> elements in the XML files

Running the build script

Overview

The build script in _build/ is run like this:

> python build_gum.py [-s SOURCE_DIR] [-t TARGET_DIR] [OPTIONS]

Source and target directories default to _build/src/ and _build/target if not supplied. Parsing and re-tagging CLAWS tags are optional if those data sources are already available and no POS tag, sentence borders or token string changes have occurred. The constituent parser is somewhat complex to re-run and slow if not run on GPU (see the README file in _build/ for more details). See below for more option settings.

The build script runs in three stages:

- Validation:

- check that all source directories have the same number of files

- check that document names match across directories

- check that token count matches across formats

- check that sentence borders match across formats (for xml/ and dep/; the tsv/ sentence borders are adjusted below)

- validate XML files using XSD schema

- Propagation:

- project token forms from xml/ to all formats

- project POS tags and lemmas from xml/ to dep/

- project sentence types and speaker information from xml/ to dep

- adjust sentence buses borders in tsv/

- generate vanilla PTB tags from extended tags

- (optional) rerun CLAWS tagging and correct based on PTB tags and dependencies (requires TreeTagger)

- (optional) re-run constituent parser based on updated POS tags (requires neural constituent parser)

- project constituent function labels from dependency trees to constituent trees

- Convert and merge:

- generate conll coref format

- update version metadata

- merge all data sources using SaltNPepper

- output merged versions of corpus in PAULA and ANNIS formats

- merge entity annotations into CoNLLU format

Options and sample output

Beyond setting different source and target directories, some flags specify optional behaviors:

- -p - Re-parse the data using the neural constituent parser based on current tokens, sentence borders and POS tags (requires neural parser and correct path settings in paths.py)

- -c - Re-tag CLAWS5 tags (requires TreeTagger configured in path settings in paths.py, and the supplied CLAWS training model in utils/treetagger/lib/)

- -v - Verbose Pepper output - useful for debugging the merge step on errors

- -n - No Pepper conversion

- --pepper_only - Just run Pepper conversion after propagation has been run once

A successful run including recovering reddit data, with common options on should look like this:

> python process_reddit.py -m add

Found complete reddit data in utils/get_reddit/cache.txt ...

Compiling raw strings

o Processing 18 files in c:\Uni\Corpora\GUM\github\_build\src\xml\...

o Processing 18 files in c:\Uni\Corpora\GUM\github\_build\src\tsv\...

o Processing 18 files in c:\Uni\Corpora\GUM\github\_build\src\dep\...

o Processing 18 files in c:\Uni\Corpora\GUM\github\_build\src\rst\...

o Processing 18 files in c:\Uni\Corpora\GUM\github\_build\src\..\target\const\...

Completed fetching reddit data.

You can now use build_gum.py to produce all annotation layers.

amir@GITM _build

> python build_gum.py -cv

====================

Validating files...

====================

Found reddit source data

Including reddit data in build

o Found 168 documents

o File names match

o Token counts match across directories

o 168 documents pass XSD validation

Enriching Dependencies:

=======================

168/168: + GUM_whow_skittles.conllu

Enriching XML files:

=======================

o Retrieved fresh CLAWS5 tags

o Enriched xml in 168 documents

Adjusting token and sentence borders:

=====================================

o Adjusted 168 WebAnno TSV files

o Adjusted 168 RST files

i Skipping fresh parses for const/

Compiling Universal Dependencies version:

========================================

o Enriched dependencies in 168 documents

Adding function labels to PTB constituent trees:

========================================

168/168: + GUM_whow_skittles.ptb

Starting pepper conversion:

==============================

Pepper is working... |

i Pepper reports 201 empty xml spans were ignored

i Pepper says:

Conversion ended successfully, required time: 00:02:05.602 s

(In case of errors you can get verbose pepper output using the -v flag)